Parsing JSON data from a web page with Python and saving it to Excel

Updated: 2024-11-14 11:32:17 Author: 脸ル粉嘟嘟

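This article shows in detail how to use Python to parse JSON data returned by a web page and save it to an Excel file. The example code is explained step by step for readers who want to follow along.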

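Install the required libraries

The script imports the modules below. requests, pandas and urllib3 are third-party packages and must be installed, together with openpyxl, which the script names as the Excel engine. os, sys, io and json are part of the standard library.

import requests
import pandas as pd
import os
import sys
import io
import urllib3
import json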

Test data

The data returned by the web page has the following structure:

{
    "success": true,
    "code": "CIFM_0000",
    "encode": null,
    "message": "ok",
    "url": null,
    "total": 3,
    "items": [
        {
            "summaryDate": "20240611",
            "summaryType": "naturalDay",
            "workday": true,
            "newCustNum": 1,
            "haveCustNum": 1691627,
            "newAccountNum": 2,
            "haveAccountNum": 1692934,
            "totalShare": 4947657341.69,
            "netCash": -3523387.25,
            "yield": 0.01386
        },
        {
            "summaryDate": "20240612",
            "summaryType": "naturalDay",
            "workday": true,
            "newCustNum": 5,
            "haveCustNum": 1672766,
            "newAccountNum": 5,
            "haveAccountNum": 1674071,
            "totalShare": 4927109080.29,
            "netCash": -20735233.55,
            "yield": 0.01387
        },
        {
            "summaryDate": "20240613",
            "summaryType": "naturalDay",
            "workday": true,
            "newCustNum": 4,
            "haveCustNum": 1662839,
            "newAccountNum": 5,
            "haveAccountNum": 1664146,
            "totalShare": 4927405885.59,
            "netCash": 110659.8,
            "yield": 0.01389
        }
    ],
    "data": null,
    "info": null
}
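To make the structure concrete, here is a minimal sketch that parses a shortened copy of this response with the standard json module. The sample string keeps only a few fields per item for brevity and is an illustration, not the real response; the variable names sample_text and parsed are assumptions.

```python
import json

# Shortened copy of the response above (illustrative; the real items carry more fields)
sample_text = """
{
  "success": true,
  "message": "ok",
  "total": 2,
  "items": [
    {"summaryDate": "20240611", "workday": true, "netCash": -3523387.25},
    {"summaryDate": "20240612", "workday": true, "netCash": -20735233.55}
  ],
  "data": null
}
"""

parsed = json.loads(sample_text)

# JSON true/false/null become Python True/False/None
print(type(parsed["success"]), parsed["data"])

# "total" is the row count reported by the server; "items" holds the rows
print(parsed["total"], len(parsed["items"]))

# Each item is a dict keyed by field name
for item in parsed["items"]:
    print(item["summaryDate"], item["netCash"])
```

The two fields the script relies on are total and items; the rest of the envelope (success, code, message and so on) is not used by the code in this article.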

Full code

import requests
import pandas as pd
import os
import sys
import io
import urllib3
import json

# Suppress the warning that is emitted because the request below uses verify=False
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
# Force UTF-8 output so non-ASCII text prints correctly on any console
sys.stdout = io.TextIOWrapper(sys.stdout.buffer, encoding='utf-8')

# "ip" is a placeholder for the real host; the dates are filled in later with str.format
url = "https://ip/ma/web/trade/dailySummary?startDate={pi_startdate}&endDate={pi_enddate}"
headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Language": "zh-CN,zh;q=0.9",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36 Edg/119.0.0.0",
}

def save_data(data, columns, excel_path, sheet_name):
    df = pd.DataFrame(data, columns=columns)
    if not os.path.exists(excel_path):
        # No workbook yet: create it
        df.to_excel(excel_path, sheet_name=sheet_name, index=False)
    else:
        # Workbook exists: open it in append mode and add the sheet
        with pd.ExcelWriter(excel_path, engine='openpyxl', mode='a') as writer:
            df.to_excel(writer, sheet_name=sheet_name, index=False)

def json2list(response_text):
    # Convert the JSON text into Python objects
    json_dict = json.loads(response_text)
    src_total = json_dict["total"]
    print("src_total: {}".format(src_total))
    items = json_dict["items"]
    excel_columns = ['summaryDate',
                     'summaryType',
                     'workday',
                     'newCustNum',
                     'haveCustNum',
                     'newAccountNum',
                     'haveAccountNum',
                     'totalShare',
                     'netCash',
                     'yield'
                     ]
    excel_data = []
    # Build one row per item, with values in the same order as excel_columns
    for item in items:
        excel_row_data = []
        for column in excel_columns:
            data = str(item[column])
            if column == 'workday':
                # Store the boolean as "0" or "1"
                data = str(0 if data == "False" else 1)
            excel_row_data.append(data)
        excel_data.append(excel_row_data)
    trg_total = len(excel_data)
    # Audit: the number of rows built must equal the "total" reported in the response
    print("trg_total: {}".format(trg_total))
    vn_biasval = trg_total - src_total
    if vn_biasval != 0:
        print("This audit-rule is not passed,diff: {}".format(vn_biasval))
        sys.exit(-1)
    else:
        print("This audit-rule is passed,diff: {}".format(vn_biasval))
    return excel_columns, excel_data

if __name__ == '__main__':
    try:
        excel_path = "C:/xxx/temp/ylb_dailySummary_{pi_startdate}_{pi_enddate}.xlsx"
        sheet_name = 'result_data'
        pi_startdate = 20240611
        pi_enddate = 20240613
        excel_path = excel_path.format(pi_startdate=pi_startdate, pi_enddate=pi_enddate)
        url = url.format(pi_startdate=pi_startdate, pi_enddate=pi_enddate)
        print("url:{}".format(url))
        print("excel_path:{}".format(excel_path))
        # timeout is (connect, read) in seconds; verify=False skips TLS certificate verification
        response_text = requests.get(url, headers=headers, timeout=(21, 300), verify=False).content.decode("utf8")
        excel_columns, excel_data = json2list(response_text)
        print("=================excel_columns=======================")
        print(excel_columns)
        print("=================excel_data==========================")
        for x in excel_data:
            print(x)
        print("=====================================================")
        # Delete the file if it already exists
        if os.path.exists(excel_path):
            os.remove(excel_path)
        # Save the file
        save_data(excel_data, excel_columns, excel_path, sheet_name)
        print("save_data is end.")
    except Exception as e:
        print("[ERROR]:" + str(e))
        sys.exit(-1)

Code walkthrough

1. Request headers

Build the request URL and headers:

# Suppress the warning that is emitted because the request uses verify=False
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
# Force UTF-8 output so non-ASCII text prints correctly on any console
sys.stdout = io.TextIOWrapper(sys.stdout.buffer, encoding='utf-8')

# "ip" is a placeholder for the real host; the dates are filled in later with str.format
url = "https://ip/ma/web/trade/dailySummary?startDate={pi_startdate}&endDate={pi_enddate}"
headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Language": "zh-CN,zh;q=0.9",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36 Edg/119.0.0.0",
}
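The URL template is filled in with str.format. As an alternative sketch, the query string can be built from a dict with the standard library's urllib.parse.urlencode, which also escapes special characters. The helper name build_url and the constant BASE_URL are hypothetical, and "ip" remains the placeholder host from the article.

```python
from urllib.parse import urlencode

# Base address from the article; "ip" is still a placeholder for the real host
BASE_URL = "https://ip/ma/web/trade/dailySummary"


def build_url(start_date, end_date):
    """Return the request URL for the given date range (hypothetical helper)."""
    query = urlencode({"startDate": start_date, "endDate": end_date})
    return "{}?{}".format(BASE_URL, query)


print(build_url(20240611, 20240613))
```

requests.get also accepts a params argument that takes such a dict directly, which avoids assembling the query string by hand.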

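2. Saving the data to Excel

If the Excel file does not exist yet, it is created. If it already exists, it is opened in append mode (mode='a') and the data is written as an additional sheet, leaving the existing sheets in place. Note that with pandas 1.3 and later, append mode raises a ValueError when a sheet with the same name already exists, unless if_sheet_exists is specified. The main program in this article deletes any existing file before saving, so it always takes the first branch.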

def save_data(data, columns, excel_path, sheet_name):
    df = pd.DataFrame(data, columns=columns)
    if not os.path.exists(excel_path):
        # No workbook yet: create it
        df.to_excel(excel_path, sheet_name=sheet_name, index=False)
    else:
        # Workbook exists: open it in append mode and add the sheet
        with pd.ExcelWriter(excel_path, engine='openpyxl', mode='a') as writer:
            df.to_excel(writer, sheet_name=sheet_name, index=False)
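For cases where the same sheet must be rewritten in a workbook that is kept, the following sketch shows one possible variation, not the author's method. It assumes pandas 1.3 or later with openpyxl installed, and passes if_sheet_exists="replace" so that an existing sheet of the same name is overwritten. The function name save_sheet and the demo file are hypothetical.

```python
import os
import tempfile

import pandas as pd


def save_sheet(df, excel_path, sheet_name):
    """Write df to sheet_name, replacing that sheet if the workbook already has it."""
    if os.path.exists(excel_path):
        # Append mode keeps the other sheets; if_sheet_exists requires pandas >= 1.3
        with pd.ExcelWriter(excel_path, engine="openpyxl", mode="a",
                            if_sheet_exists="replace") as writer:
            df.to_excel(writer, sheet_name=sheet_name, index=False)
    else:
        df.to_excel(excel_path, sheet_name=sheet_name, index=False, engine="openpyxl")


# Demo in a temporary directory so that no real file is touched
demo_path = os.path.join(tempfile.mkdtemp(), "demo.xlsx")
save_sheet(pd.DataFrame({"summaryDate": ["20240611"]}), demo_path, "result_data")
save_sheet(pd.DataFrame({"summaryDate": ["20240612", "20240613"]}), demo_path, "result_data")

# Read the sheet back as text to confirm that the second write replaced the first
result = pd.read_excel(demo_path, sheet_name="result_data", dtype=str)
print(result)
```

With this variation the caller no longer needs to delete the file beforehand, and other sheets in the workbook survive a rerun.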

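Parse the JSON data to obtain the field names and the corresponding list of rows. The function also audits the result: the number of rows built must equal the total field of the response, otherwise the script exits.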

def json2list(response_text):
    # Convert the JSON text into Python objects
    json_dict = json.loads(response_text)
    src_total = json_dict["total"]
    print("src_total: {}".format(src_total))
    items = json_dict["items"]
    excel_columns = ['summaryDate',
                     'summaryType',
                     'workday',
                     'newCustNum',
                     'haveCustNum',
                     'newAccountNum',
                     'haveAccountNum',
                     'totalShare',
                     'netCash',
                     'yield'
                     ]
    excel_data = []
    # Build one row per item, with values in the same order as excel_columns
    for item in items:
        excel_row_data = []
        for column in excel_columns:
            data = str(item[column])
            if column == 'workday':
                # Store the boolean as "0" or "1"
                data = str(0 if data == "False" else 1)
            excel_row_data.append(data)
        excel_data.append(excel_row_data)
    trg_total = len(excel_data)
    # Audit: the number of rows built must equal the "total" reported in the response
    print("trg_total: {}".format(trg_total))
    vn_biasval = trg_total - src_total
    if vn_biasval != 0:
        print("This audit-rule is not passed,diff: {}".format(vn_biasval))
        sys.exit(-1)
    else:
        print("This audit-rule is passed,diff: {}".format(vn_biasval))
    return excel_columns, excel_data
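json2list converts every value to a string, so numeric fields such as totalShare end up as text cells in Excel, and it terminates the interpreter when the audit fails. The sketch below is one possible variation, not the author's method: it keeps the native JSON types, maps workday to 0 or 1, and raises an exception instead of exiting. The names items_to_rows and COLUMNS are hypothetical, and the column list is shortened for brevity.

```python
import json

# Subset of the article's columns, shortened for brevity
COLUMNS = ["summaryDate", "workday", "newCustNum", "totalShare", "yield"]


def items_to_rows(response_text, columns=COLUMNS):
    """Return one list per item, keeping numbers as numbers (hypothetical helper)."""
    payload = json.loads(response_text)
    rows = []
    for item in payload["items"]:
        row = []
        for column in columns:
            value = item[column]
            if column == "workday":
                value = 1 if value else 0  # bool -> 0/1
            row.append(value)
        rows.append(row)
    # Audit: the row count must match the "total" field of the response
    if len(rows) != payload["total"]:
        raise ValueError(
            "audit failed: built {} rows, expected {}".format(len(rows), payload["total"])
        )
    return rows


sample = (
    '{"total": 1, "items": [{"summaryDate": "20240611", "workday": true, '
    '"newCustNum": 1, "totalShare": 4947657341.69, "yield": 0.01386}]}'
)
print(items_to_rows(sample))
```

Raising an exception lets the caller decide how to react, for example by logging the mismatch inside the existing try/except of the main program.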

3. Test entry point

if __name__ == '__main__':

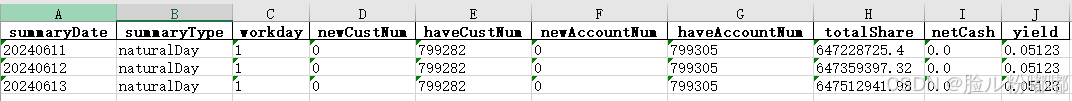

Test result

Running the script generates the file ylb_dailySummary_20240611_20240613.xlsx.

